At Dynamic Domain, our professional database modelers begin database development process with analyzing the core data requirements to support business process within the scope of potential use of the information system, design conceptual, logical and physical data structure for variety of purpose and finally illustrates database model through industry standard database graphical models such as entity relationship diagram (ERD), Business Process Flowchart etc.

Database Development Process:

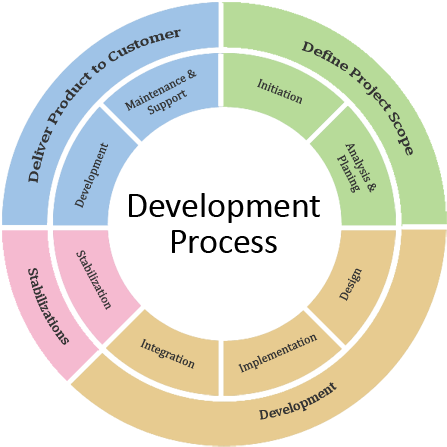

The chief object to database development process is to produce an operational database for an information system. Our professional database modelers begin the three schemas (external, conceptual, and internal) and finally populate (supply with data) the database. Our professionals generally go through the following work flow in regard to design three schemas.

Conceptual Data Modeling:

Our professional database modelers collect the data requirements and produces entity relationship diagrams (ERDs) for the conceptual schema and for each external schema.

Data requirements can have many formats such as interviews with business client, documentation of existing systems, and proposed forms and reports. The conceptual schema should represent all the requirements and formats. In contrast, the external schemas (or views) represent the requirements of a particular usage of the database such as a form or report rather than all requirements.

Thus, external schemas are generally much smaller than the conceptual schema. The conceptual and external schemas follow the rules of the Entity Relationship Model, a graphical representation that depicts things of interest (entities) and relationships among entities.

Physical Database Design:

Our professional database modelers plan out the physical database performance, storage, data security, data restore and backup, replication, cloud back design and data quality at this stage. If a database is distributed, physical design decisions are necessary for each location. An efficient implementation minimizes response time without using too many resources such as disk space and main memory. Because response time is difficult to directly measure, other measures such as the amount of disk input-output activity is often used as a substitute. In the physical database design phase, two important choices are about indexes and data placement. An index is an auxiliary file that can improve performance. For each column of a table, the designer decides whether an index can improve performance. An index can improve performance on retrievals but reduce performance on updates.

Distributed Database Design

Distributed database design involves choices about the location of data and processes so that performance can be improved in the client server architecture. There are three great challenges need to be handled carefully i) Data Fragmentation ii) Data Replication and iii) Data Allocation:

-

Data Fragmentation:

Data fragmentation is a technique used to break up objects. In designing a distributed database, you must decide which portion of the database is to be stored where. One technique used to break up the database into logical units called fragments. Fragmentation information is stored in a distributed data catalogue which the processing computer uses to process a user's request.

As a point of discussion, we can look at data fragmentation in terms of relations or tables. The following matrix describes the different types of fragmentation that can be used.

Horizontal fragmentation: This type of fragmentation refers division of a relation into fragments of rows. Each fragment is stored at a different computer or node, and each fragment contains unique rows. Each horizontal fragment may have a different number of rows, but each fragment must have the same attributes.

Vertical fragmentation: This type of fragmentation refers to the division of a relation into fragments that comprise a collection of attributes. Each vertical fragment must have the same number of rows, but can have different attributes depending on the key.

Mixed fragmentation: This type of fragmentation is a two-step process. First, horizontal fragmentation is done to obtain the necessary rows, then vertical fragmentation is done to divide the attributes among the rows.

-

Data Replication: Data replication is the storage of data copies at multiple sites on the network. Fragment copies can be stored at several sites, thus enhancing data availability and response time. Replicated data is subject to a mutual consistency rule. This rule requires that all copies of the data fragments must be identical and to ensure data consistency among all of the replications.

A database can be either fully replicated, partially replicated or un-replicated.

Full replication: Stores multiple copies of each database fragment at multiple sites. Fully replicated databases can be impractical because of the amount of overhead imposed on the system.

Partial replication: Stores multiple copies of some database fragments at multiple sites. Most DDBMS can handle this type of replication very well.

No replication: Stores each database fragment at a single site. No duplication occurs.

- Data Allocation

Data allocation is a process of deciding where to store the data. It also involves a decision as to which data is stored at what location. Data allocation can be centralized, partitioned or replicated.

Centralised: The entire database is stored at one site. No distribution occurs.

Partitioned: The database is divided into several fragments that are stored at several sites.

Replicated: Copies of one or more database fragments are stored at several sites.

Relational Database Management System

Object Oriented RDBMS

Tools of Database Development:

At Dynamic Domain to improve productivity in developing information systems, computer-aided software engineering (CASE) tools have been adopted. CASE tools can help improve the productivity of information systems professionals working on large projects as well as end users working on small projects. We have tons of example/ studies have provided evidence that CASE tools facilitate improvements in the early phases of systems development leading to lower cost, higher quality, and faster implementations. Our professional database modelers first preference is Microsoft Office Visio Professional if we have the choice to CASE. This is tool provides predefined shapes and connections among the shapes. The connection tools typically allow shapes to be moved while remaining connected as though “glued.” This glue feature provides important flexibility because symbols on a diagram typically are rearranged many times.